For more than 20 years, few developers and architects dared to touch big data systems because of implementation complexities, excessive demands for capable engineers, protracted development times, and the unavailability of key architectural components.

But in recent years, the emergence of new big data technologies has enabled a veritable explosion in the number of big data architectures that process hundreds of thousands of events per second, if not more. Without careful planning, using these technologies could require significant development effort in implementation and maintenance. Fortunately, today's solutions make it relatively simple for a team of any size to use these architectural pieces effectively.

| Period | Characterized by | Description |
|---|---|---|
| 2000-2007 | The prevalence of SQL databases and batch processing | The landscape comprises MapReduce, FTP, mechanical hard drives, and Internet Information Services (IIS). |
| 2007-2014 | The rise of social media: Facebook, Twitter, LinkedIn, and YouTube | Photos and videos are created and shared at an unprecedented rate by increasingly ubiquitous smartphones. The first cloud platforms, NoSQL databases, and processing engines (e.g., Apache Cassandra 2008, Hadoop 2006, MongoDB 2009, Apache Kafka 2011, AWS 2006, and Azure 2010) are released, and companies hire engineers en masse to support these technologies on virtualized operating systems, most of which are on-premises. |
| 2014-2020 | Cloud expansion | Smaller companies move to cloud platforms, NoSQL databases, and processing engines, supporting an ever wider variety of apps. |
| 2020-present | Cloud evolution | Big data architects shift their focus toward high availability, replication, auto-scaling, resharding, load balancing, data encryption, reduced latency, compliance, fault tolerance, and auto-recovery. The use of containers, microservices, and agile processes continues to accelerate. |

Modern architects must choose between rolling their own platforms with open-source tools and adopting a vendor-provided solution. Infrastructure-as-a-service (IaaS) is required when adopting open-source options, because IaaS provides the basic components for virtual machines and networking and gives engineering teams the flexibility to craft their architecture. On the other hand, vendors' prepackaged solutions and platform-as-a-service (PaaS) offerings remove the need to assemble these basic systems and configure the required infrastructure. This convenience, however, comes with a higher price tag.

Companies can adopt big data systems effectively by combining cloud providers with cloud-native, open-source tools. This combination allows them to build a capable back end with a fraction of the traditional level of complexity. The industry now has suitable open-source PaaS options free of vendor lock-in.

In the rest of this article, we present a big data architecture that showcases ksqlDB and Kubernetes operators, which rely on the open-source Kafka and Kubernetes (K8s) technologies, respectively. We'll also incorporate YugabyteDB to provide new scalability and consistency capabilities. Each of these systems is powerful on its own, but their capabilities are amplified when combined. To tie our components together and easily provision our system, we rely on Pulumi, an infrastructure-as-code (IaC) system.

Our Sample Project's Architectural Requirements

Let's define hypothetical requirements for a system that demonstrates a big data architecture aimed at a general-purpose application. Say we work for a local video-streaming company. Our platform offers localized and original content, and we need to track viewing progress for every video a customer watches.

Our primary use cases are:

| Stakeholder | Use Case |
|---|---|
| Customers | Customers' content consumption generates system events. |
| Third-party License Holders | Third-party license holders receive royalties based on consumption of the content they own. |
| Integrated Advertisers | Advertisers require impression metric reports based on user actions. |

Suppose that we have 200,000 daily users, with a peak load of 100,000 simultaneous users. Each user watches two hours per day, and we want to track progress with five-second accuracy. The data does not require strict accuracy (compared with payment systems, for example).

At peak times, 100,000 simultaneous users who each send one heartbeat every five seconds produce about 20,000 requests per second (RPS). Over a full day, we get roughly 300 million heartbeat events:

200,000 users × 1,440 heartbeat events generated over two daily hours per user (12 heartbeat events per minute × 120 minutes daily) = 288,000,000 heartbeats per day ≈ 300,000,000
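A short Python sketch can double-check the daily total above and the peak rate implied by the same assumptions. The constant names are our own, and the numbers come from the stated requirements. The peak figure counts heartbeats only, so any other request types would be added on top of it.

```python
# Back-of-the-envelope load estimate using the stated assumptions.
DAILY_USERS = 200_000
PEAK_CONCURRENT_USERS = 100_000
WATCH_MINUTES_PER_DAY = 120    # two hours per user per day
HEARTBEAT_INTERVAL_SEC = 5     # five-second tracking accuracy
SECONDS_PER_DAY = 86_400

# 60 / 5 = 12 heartbeats per minute for each active viewer
heartbeats_per_minute = 60 // HEARTBEAT_INTERVAL_SEC
heartbeats_per_user_per_day = heartbeats_per_minute * WATCH_MINUTES_PER_DAY
daily_heartbeats = DAILY_USERS * heartbeats_per_user_per_day

# At peak, every concurrent viewer sends one heartbeat per interval.
peak_heartbeats_per_sec = PEAK_CONCURRENT_USERS // HEARTBEAT_INTERVAL_SEC

# Average over a full day, for comparison with the peak.
average_heartbeats_per_sec = daily_heartbeats / SECONDS_PER_DAY

print(f"heartbeats per user per day: {heartbeats_per_user_per_day:,}")  # 1,440
print(f"daily heartbeats: {daily_heartbeats:,}")                        # 288,000,000
print(f"peak heartbeats per second: {peak_heartbeats_per_sec:,}")       # 20,000
print(f"average heartbeats per second: {average_heartbeats_per_sec:,.0f}")  # 3,333
```

The gap between the daily average and the peak is the reason we size for the peak and want systems that scale horizontally.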

We could use simple and reliable subsystems like RabbitMQ and SQL Server, but our load numbers push against the limits of what such single-server subsystems can handle. If our business and transaction load grows by 100%, for example, these single servers would no longer be able to handle the workload. We need horizontally scalable systems for storage and processing, and we as developers must use capable tools or suffer the consequences.

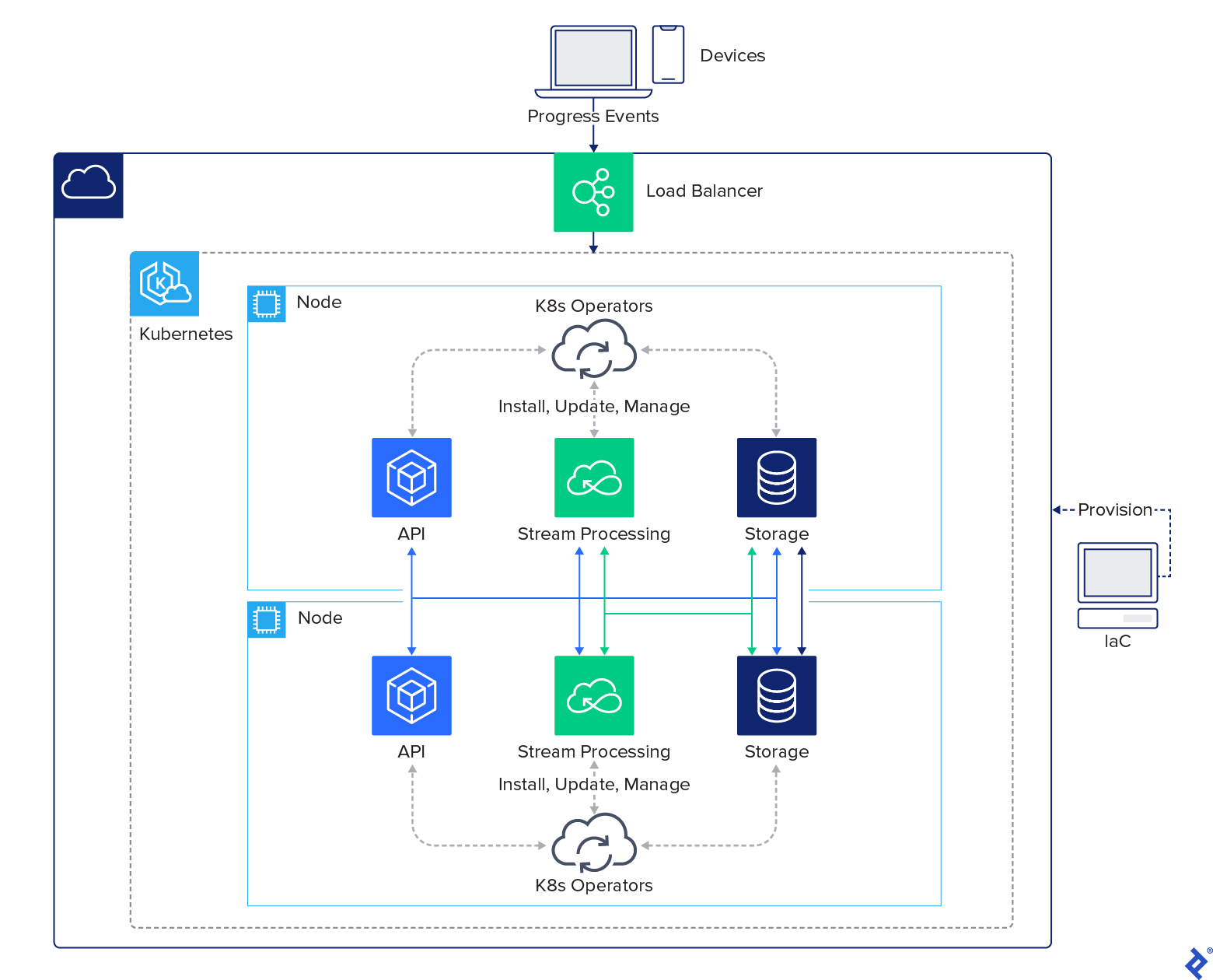

Before we choose our specific systems, let's consider our high-level architecture:

With our system structure specified, we can now shop for suitable systems.

Data Storage

Big data requires a database. I've noticed a trend away from purely relational schemas toward a mix of SQL and NoSQL approaches.

SQL and NoSQL Databases

Why do companies choose databases of each type?

| SQL | NoSQL |
|---|---|

Modern databases of each type are beginning to implement each other's features. The differences between SQL and NoSQL options are shrinking rapidly, which makes it harder to choose a tool for our architecture. Current database industry rankings list nearly 400 databases to choose from.

Distributed SQL Databases

Interestingly, a new class of databases has evolved to cover all the necessary functionality of both NoSQL and SQL systems. A distinguishing characteristic of this emergent class is a single logical SQL database that is physically distributed across multiple nodes. While it offers no dynamic schema, the new database class boasts these key features:

- Transactions

- Synchronous replication

- Query distribution

- Distributed data storage

- Horizontal write scalability
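A toy model of hash sharding makes "distributed data storage" and "horizontal write scalability" more concrete. YugabyteDB, for instance, maps a hash-sharded primary key into a 16-bit hash space (0x0000 to 0xFFFF) and splits that space into tablets spread across nodes. The sketch below follows the same idea with simplifying assumptions. It uses SHA-256 as a stand-in hash function, which is not the one YugabyteDB uses. The tablet count, node count, and round-robin placement are arbitrary.

```python
import hashlib
from collections import Counter

HASH_SPACE = 0x10000  # 16-bit hash space: 0x0000 through 0xFFFF

def key_hash(key: str) -> int:
    """Map a primary-key value into the 16-bit hash space (stand-in hash)."""
    digest = hashlib.sha256(key.encode("utf-8")).digest()
    return int.from_bytes(digest[:2], "big")  # first two bytes -> 0..65535

def tablet_for(key: str, num_tablets: int) -> int:
    """Split the hash space into equal, contiguous ranges (tablets)."""
    return key_hash(key) * num_tablets // HASH_SPACE

def node_for(tablet: int, num_nodes: int) -> int:
    """Naive round-robin placement of tablets onto nodes."""
    return tablet % num_nodes

def distribute(num_keys: int, num_tablets: int, num_nodes: int) -> Counter:
    """Count how many hypothetical user keys (and thus writes) land on each node."""
    load: Counter = Counter()
    for i in range(num_keys):
        tablet = tablet_for(f"user-{i}", num_tablets)
        load[node_for(tablet, num_nodes)] += 1
    return load

# Roughly a third of the keys should land on each of three nodes.
print(sorted(distribute(30_000, num_tablets=12, num_nodes=3).items()))
```

Writes for different keys land on different nodes, so adding nodes and moving some tablets onto them adds write capacity. Real systems also replicate each tablet (YugabyteDB uses Raft for this), and this sketch leaves that out.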

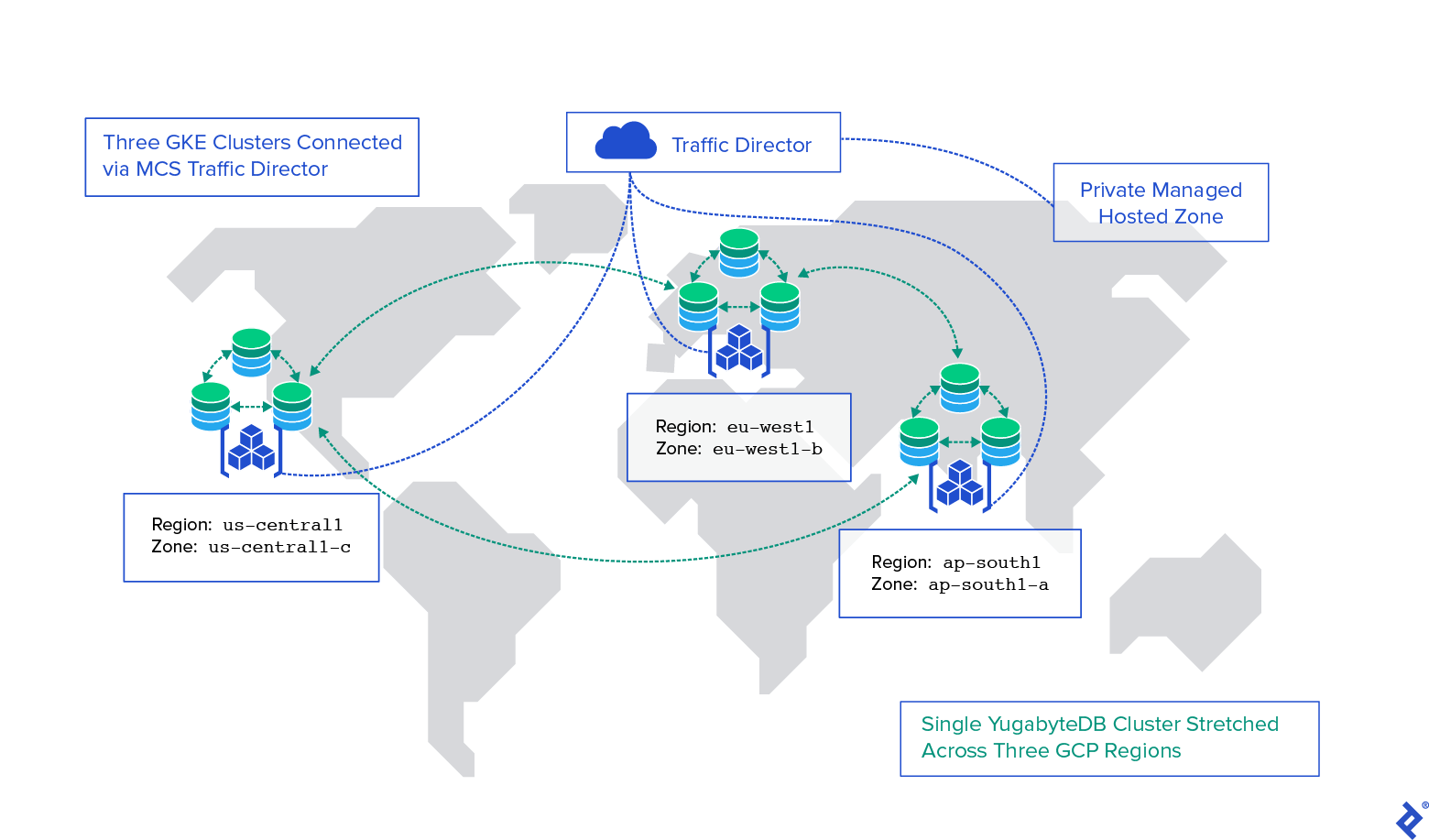

Per our requirements, our design must avoid cloud lock-in, which rules out database services like Amazon Aurora or Google Spanner. Our design must also ensure that the distributed database handles the expected data volume. We'll use the performant, open-source YugabyteDB for our project's needs. Here's what the resulting cluster architecture looks like:

More precisely, we chose YugabyteDB because it is:

- PostgreSQL-compatible and works with many PostgreSQL database tools, such as language drivers, object-relational mapping (ORM) tools, and schema-migration tools.

- Horizontally scalable, with performance scaling out simply as nodes are added.

- Resilient and consistent in its data layer.

- Deployable in public clouds, natively with Kubernetes, or on its own managed services.

- 100% open source, with robust enterprise features such as distributed backups, encryption of data at rest, in-flight TLS encryption, change data capture, and read replicas.
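YugabyteDB's YSQL API speaks the PostgreSQL wire protocol and listens on port 5433 by default, so ordinary PostgreSQL drivers work against it. The sketch below assumes a hypothetical `watch_segments` table for the aggregated viewing data. It accepts any DB-API 2.0 connection, for example one returned by `psycopg2.connect(host=..., port=5433, dbname="yugabyte", user="yugabyte")`, and the `%s` placeholders follow psycopg2's parameter style. The `HASH`/`ASC` key clause is YugabyteDB-specific. It shards rows by `user_id` and keeps each user's segments sorted.

```python
# Hypothetical schema for aggregated viewing progress.
# user_id HASH: rows are spread across tablets by user_id.
# video_id ASC, start_sec ASC: a user's segments are stored in sorted order.
CREATE_WATCH_SEGMENTS = """
CREATE TABLE IF NOT EXISTS watch_segments (
    user_id   TEXT    NOT NULL,
    video_id  TEXT    NOT NULL,
    start_sec INTEGER NOT NULL,
    end_sec   INTEGER NOT NULL,
    PRIMARY KEY (user_id HASH, video_id ASC, start_sec ASC)
)
"""

# Standard PostgreSQL upsert: extends a segment when a later end arrives.
UPSERT_SEGMENT = """
INSERT INTO watch_segments (user_id, video_id, start_sec, end_sec)
VALUES (%s, %s, %s, %s)
ON CONFLICT (user_id, video_id, start_sec)
DO UPDATE SET end_sec = GREATEST(watch_segments.end_sec, EXCLUDED.end_sec)
"""

def ensure_schema(conn) -> None:
    """Create the table if needed, using any DB-API 2.0 connection."""
    cur = conn.cursor()
    try:
        cur.execute(CREATE_WATCH_SEGMENTS)
    finally:
        cur.close()
    conn.commit()

def save_segment(conn, user_id: str, video_id: str, start_sec: int, end_sec: int) -> None:
    """Insert one watched segment or extend an existing one."""
    if end_sec < start_sec:
        raise ValueError("end_sec must not precede start_sec")
    cur = conn.cursor()
    try:
        cur.execute(UPSERT_SEGMENT, (user_id, video_id, start_sec, end_sec))
    finally:
        cur.close()
    conn.commit()
```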

Our chosen product also has attributes that are desirable for any open-source project:

- A healthy community

- Excellent documentation

- Rich tooling

- A well-funded company backing the product

With YugabyteDB, we have a perfect match for our architecture, and now we can look at our stream-processing engine.

Real-time Stream Processing

Recall that our example project has 300 million daily heartbeat events and a peak load of 100,000 simultaneous users. This throughput generates a lot of data that is not useful to us in its raw form. We can, however, aggregate it into our desired final form: For each user, which segments of which videos did they watch?

This approach significantly reduces our data storage requirements. To translate the raw data into our desired format, we must first implement real-time stream-processing infrastructure.
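Before handing the aggregation to a stream processor, we can sketch it in plain Python. The idea is to sort one user's heartbeats for a video by playback position and merge positions that are at most one heartbeat interval apart into contiguous watched segments. The `Heartbeat` type and the five-second gap are assumptions drawn from the requirements above. A production pipeline would compute this incrementally in the stream-processing layer rather than over an in-memory list.

```python
from dataclasses import dataclass
from typing import Iterable, List, Tuple

HEARTBEAT_INTERVAL_SEC = 5  # from the five-second accuracy requirement

@dataclass(frozen=True)
class Heartbeat:
    user_id: str
    video_id: str
    position_sec: int  # playback position reported by the player

def watched_segments(beats: Iterable[Heartbeat],
                     gap: int = HEARTBEAT_INTERVAL_SEC) -> List[Tuple[int, int]]:
    """Merge playback positions into (start_sec, end_sec) segments.

    Assumes all heartbeats belong to one user and one video. Duplicate
    positions (e.g., redelivered events) are collapsed, and positions no
    more than `gap` seconds apart count as continuous viewing.
    """
    positions = sorted({b.position_sec for b in beats})
    segments: List[Tuple[int, int]] = []
    for pos in positions:
        if segments and pos - segments[-1][1] <= gap:
            start, _ = segments[-1]
            segments[-1] = (start, pos)   # extend the current segment
        else:
            segments.append((pos, pos))   # start a new segment
    return segments

beats = [Heartbeat("u1", "v1", p) for p in (0, 5, 10, 15, 120, 125, 10)]
print(watched_segments(beats))  # [(0, 15), (120, 125)]
```

Seven raw events collapse into two rows. That reduction is why we store the aggregated form rather than every heartbeat.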

Many smaller teams with no big data experience might approach this translation by implementing microservices that subscribe to a message broker, select recent events from the database, and then publish processed data to another queue. Although this approach is simple, it forces the team to handle deduplication, reconnections, ORMs, secrets management, testing, and deployment.

More experienced teams tend to choose either the pricier option of AWS Kinesis or the more affordable Apache Spark Structured Streaming for stream processing. Apache Spark is open source, yet vendor-specific. Our architecture aims to use open-source components that give us the flexibility to choose our hosting partner, so we will look at a third, interesting alternative. That alternative is Kafka combined with Confluent's open-source offerings, which include Schema Registry, Kafka Connect, and ksqlDB.

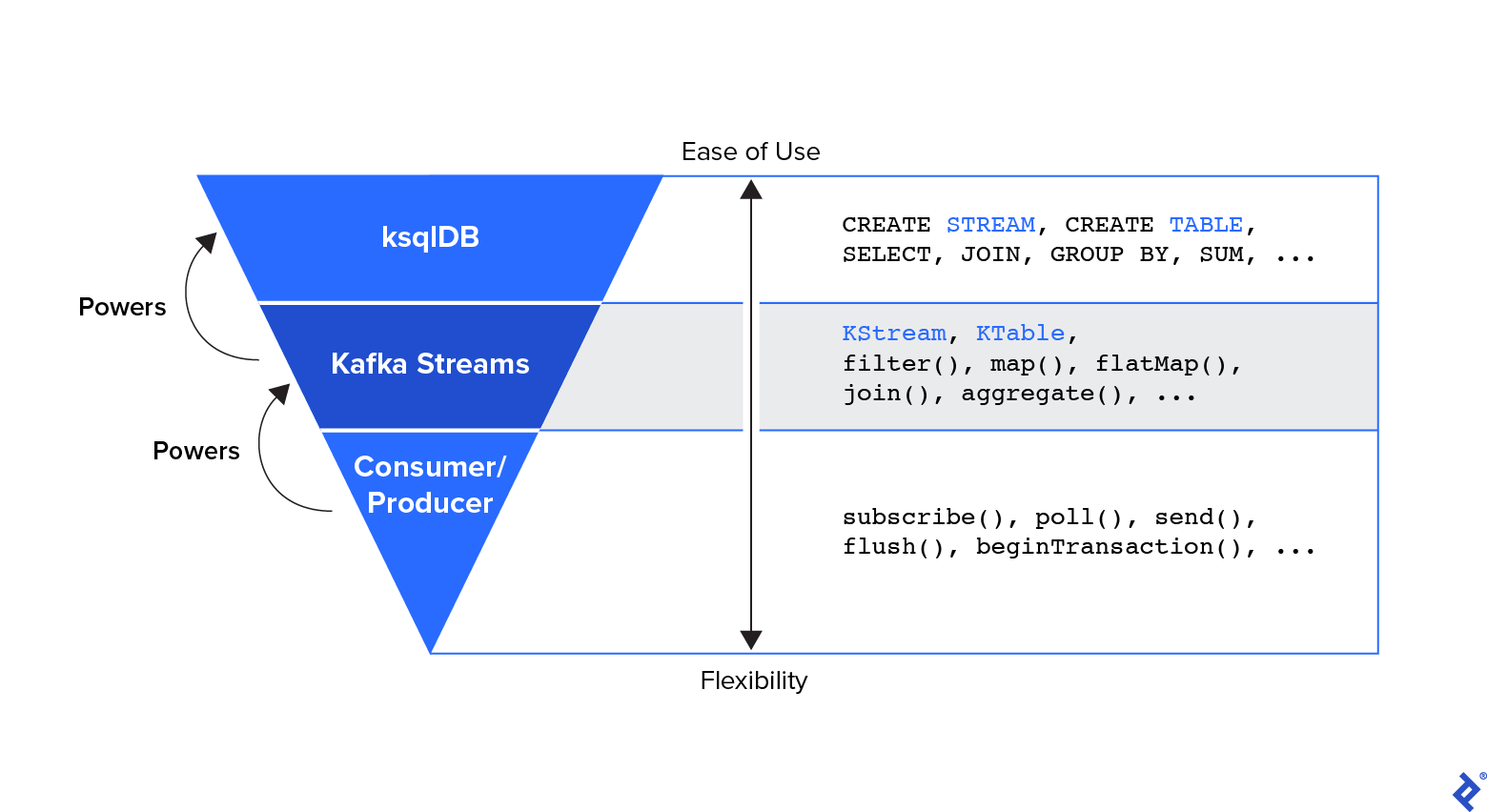

Kafka itself is just a distributed log system. Traditional Kafka shops use Kafka Streams to implement their stream processing, but we will use ksqlDB, a more advanced tool that subsumes Kafka Streams' functionality:

More specifically, ksqlDB (a server, not a library) is a stream-processing engine that lets us write processing queries in an SQL-like language. All of our functions run inside a ksqlDB cluster, which we generally place physically close to our Kafka cluster to maximize data throughput and processing performance.
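To illustrate the SQL-like language, the statements below declare a stream over a heartbeat topic and a table of per-user, per-video session windows. The Python code then wraps them in a request to the ksqlDB server's `/ksql` REST endpoint. The topic name, column names, 30-second session gap, and server URL are assumptions for this sketch. The URL uses ksqlDB's default port, 8088. Multi-column `GROUP BY` keys require a reasonably recent ksqlDB release. The code only builds the request, and sending it requires a running server.

```python
import json
import urllib.request

KSQLDB_URL = "http://localhost:8088"  # hypothetical ksqlDB server address

# Declare a stream over the (hypothetical) raw heartbeat topic, then
# aggregate heartbeats into session windows per user and video.
STATEMENTS = """
CREATE STREAM heartbeats (
    user_id VARCHAR KEY,
    video_id VARCHAR,
    position_sec INT
) WITH (KAFKA_TOPIC = 'heartbeats', VALUE_FORMAT = 'JSON');

CREATE TABLE watch_sessions AS
    SELECT user_id,
           video_id,
           MIN(position_sec) AS start_sec,
           MAX(position_sec) AS end_sec
    FROM heartbeats
    WINDOW SESSION (30 SECONDS)
    GROUP BY user_id, video_id
    EMIT CHANGES;
"""

def build_ksql_request(statements: str, base_url: str = KSQLDB_URL) -> urllib.request.Request:
    """Build (but do not send) a POST request for ksqlDB's /ksql endpoint."""
    body = json.dumps({"ksql": statements, "streamsProperties": {}}).encode("utf-8")
    return urllib.request.Request(
        f"{base_url}/ksql",
        data=body,
        headers={
            "Content-Type": "application/vnd.ksql.v1+json",
            "Accept": "application/vnd.ksql.v1+json",
        },
        method="POST",
    )

request = build_ksql_request(STATEMENTS)
# Against a live server:
# with urllib.request.urlopen(request) as response:
#     print(json.load(response))
```

Once submitted, the `CREATE TABLE ... AS SELECT` statement becomes a persistent query that keeps the aggregated table up to date as new heartbeats arrive.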

We'll store any data we process in an external database. Kafka Connect makes this easy by acting as a framework that connects Kafka with other databases and external systems, such as key-value stores, search indices, and file systems. If we want to import or export a topic (a "stream" in Kafka parlance) to or from a database, we don't need to write any code.
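For example, a JDBC sink connector can copy an aggregated topic into YugabyteDB through its PostgreSQL-compatible endpoint. The sketch below builds the JSON payload that Kafka Connect's REST API accepts on `POST /connectors`. The connector class and property names come from Confluent's JDBC sink connector. The connector name, topic, host, and credentials are placeholders. The JDBC sink needs schema-aware data, such as Avro with Schema Registry, so key and value converter settings must match how your topic is serialized. They are omitted here, and you should check the options against the connector version you deploy.

```python
import json

def jdbc_sink_config(topic: str, jdbc_url: str, user: str, password: str) -> dict:
    """Return a Kafka Connect payload for a JDBC sink (placeholder values)."""
    return {
        "name": f"{topic}-yugabytedb-sink",  # hypothetical connector name
        "config": {
            "connector.class": "io.confluent.connect.jdbc.JdbcSinkConnector",
            "tasks.max": "2",
            "topics": topic,
            "connection.url": jdbc_url,
            "connection.user": user,
            "connection.password": password,
            # Upsert on the record key so updated aggregates overwrite old rows.
            "insert.mode": "upsert",
            "pk.mode": "record_key",
            "auto.create": "true",
        },
    }

payload = jdbc_sink_config(
    topic="WATCH_SESSIONS",  # hypothetical topic holding aggregated sessions
    jdbc_url="jdbc:postgresql://yb-tserver:5433/yugabyte",  # YSQL default port
    user="yugabyte",
    password="change-me",
)
print(json.dumps(payload, indent=2))
# Submit it to a Connect worker (REST port 8083 by default), e.g.:
# curl -X POST -H "Content-Type: application/json" \
#      --data @payload.json http://<connect-host>:8083/connectors
```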

Together, these components let us ingest and process the data (for example, grouping heartbeats into session windows) and save it to the database without writing our own traditional services. Because the system is distributed and scalable, it can grow with our workload.

Kafka isn't perfect. It is complex and requires deep knowledge to set up, work with, and maintain. Since we're not maintaining our own production infrastructure, we'll use managed services from Confluent. At the same time, Kafka has a huge community and a vast collection of samples and documentation that can help us in almost any situation.
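To feed the pipeline, each player's heartbeats have to reach Kafka. Here is a minimal sketch with the confluent-kafka Python client, assuming a managed cluster that authenticates with an API key over SASL/PLAIN. The topic name and the JSON payload shape are assumptions. Keying each record by user ID sends all of a user's heartbeats to the same partition, which preserves their order. The client import sits inside `run_demo` so the helpers can be read and tested without the package installed.

```python
import json
from typing import Dict, Tuple

def kafka_config(bootstrap: str, api_key: str, api_secret: str) -> Dict[str, str]:
    """librdkafka settings for a managed cluster using API-key (SASL/PLAIN) auth."""
    return {
        "bootstrap.servers": bootstrap,
        "security.protocol": "SASL_SSL",
        "sasl.mechanisms": "PLAIN",
        "sasl.username": api_key,
        "sasl.password": api_secret,
    }

def encode_heartbeat(user_id: str, video_id: str, position_sec: int) -> Tuple[bytes, bytes]:
    """Serialize one heartbeat; keying by user keeps a user's events in one partition."""
    key = user_id.encode("utf-8")
    value = json.dumps({"video_id": video_id, "position_sec": position_sec}).encode("utf-8")
    return key, value

def send_heartbeat(producer, topic: str, user_id: str, video_id: str, position_sec: int) -> None:
    """Queue one heartbeat on any object with confluent-kafka's produce/poll methods."""
    key, value = encode_heartbeat(user_id, video_id, position_sec)
    producer.produce(topic, key=key, value=value)
    producer.poll(0)  # serve delivery callbacks without blocking

def run_demo(bootstrap: str, api_key: str, api_secret: str) -> None:
    """Send one heartbeat to a real cluster (requires `pip install confluent-kafka`)."""
    from confluent_kafka import Producer

    producer = Producer(kafka_config(bootstrap, api_key, api_secret))
    send_heartbeat(producer, "heartbeats", "user-1", "video-42", 15)
    producer.flush()  # block until queued messages are delivered
```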

Now that we have got coated our core architectural parts, letâs have a look at operational gear to make our lives more effective.

Infrastructure-as-code: Pulumi

Infrastructure-as-code (IaC) enables DevOps teams to deploy and manage infrastructure at scale, across multiple providers, with simple instructions. IaC is a critical best practice for any cloud-development project.

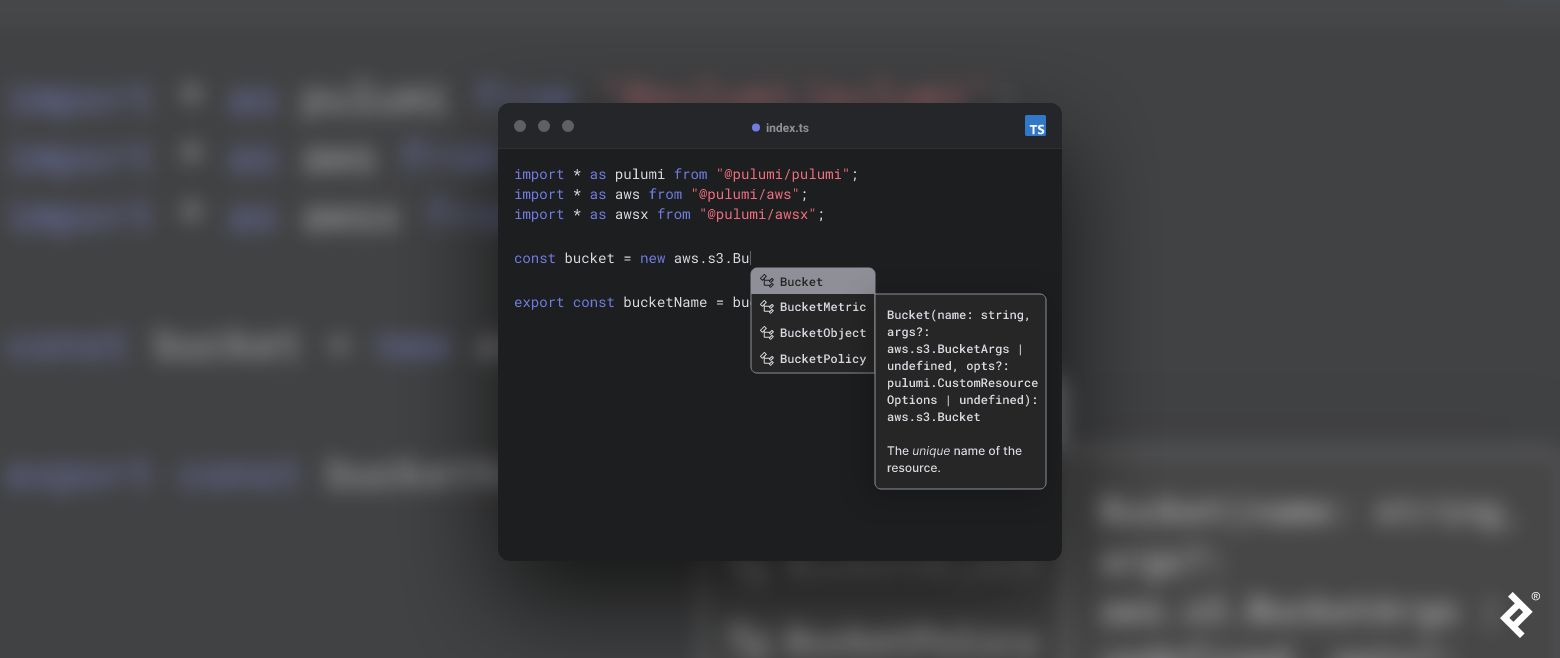

Most teams that use IaC go with Terraform or a cloud-native offering like AWS CDK. Terraform requires us to write in its product-specific language (HCL), and AWS CDK only works within the AWS ecosystem. We prefer a tool that offers greater flexibility in writing our deployment specifications and doesn't lock us into a specific vendor. Pulumi fits these requirements perfectly.

Pulumi is a cloud-native platform that lets us deploy any cloud infrastructure, including virtual servers, containers, applications, and serverless functions.

We don't need to learn a new language to work with Pulumi. We can use one of our favorites:

- Python

- JavaScript

- TypeScript

- Go

- .NET/C#

- Java

- YAML

So how do we put Pulumi to work? For example, say we want to provision an EKS cluster in AWS. We would:

- Install Pulumi.

- Install and configure the AWS CLI.
  - Pulumi is simply an intelligent wrapper on top of supported providers.
  - Some providers require calls to their HTTP API, and some, like AWS, rely on their CLI.

- Run `pulumi up`.
  - The Pulumi engine reads its current state from storage, calculates the changes made to our code, and attempts to apply those changes.
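For the EKS example above, the Pulumi program itself can be a few lines of Python. This sketch assumes a project created with `pulumi new aws-python` and the `pulumi-eks` package installed (`pip install pulumi-eks`). The resource name and node sizes are placeholders. The file is executed by `pulumi up`, not directly by the Python interpreter.

```python
"""__main__.py of a Pulumi Python project; run it with `pulumi up`."""
import pulumi
import pulumi_eks as eks

# EKS cluster with a default node group; instance type and sizes are placeholders.
cluster = eks.Cluster(
    "big-data-cluster",
    instance_type="t3.large",
    desired_capacity=3,
    min_size=3,
    max_size=6,
)

# Export the kubeconfig so kubectl (or a Pulumi Kubernetes provider) can reach the cluster.
pulumi.export("kubeconfig", cluster.kubeconfig)
```

On each run, `pulumi up` previews the difference between this declaration and the recorded state before applying it. Changing `desired_capacity` and rerunning therefore updates only the node group.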

In an ideal world, our infrastructure would be installed and configured via IaC. We'd store our entire infrastructure description in Git, write unit tests, use pull requests, and create the entire environment with one click in our continuous integration and continuous deployment (CI/CD) tool.

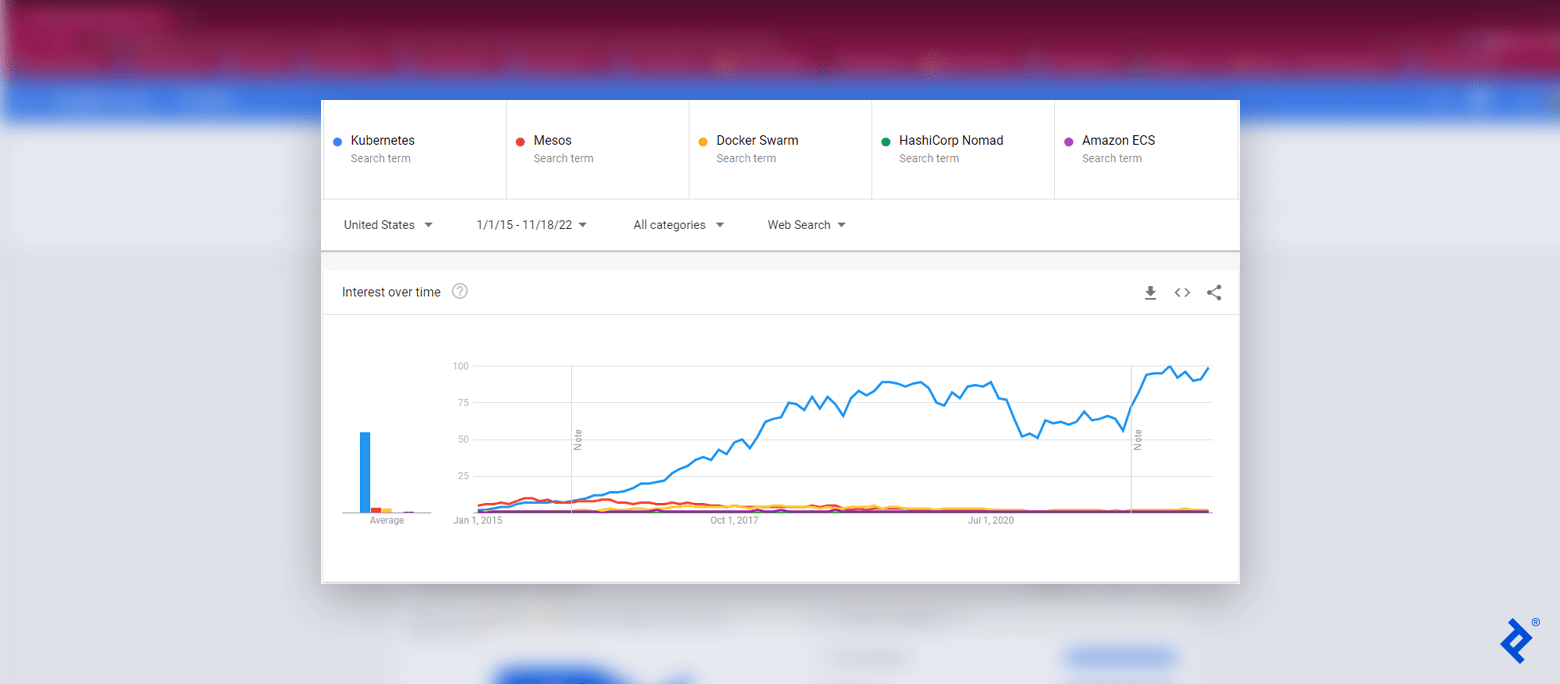

Kubernetes Operators

Kubernetes is a cloud application operating system. It can be self-managed or managed, run on bare metal or in the cloud, or come as a distribution such as K3s or OpenShift. But the core is always Kubernetes. Outside of rare cases involving serverless, legacy, and vendor-specific systems, Kubernetes is a must-have component when building a solid architecture, and it is only growing in popularity.

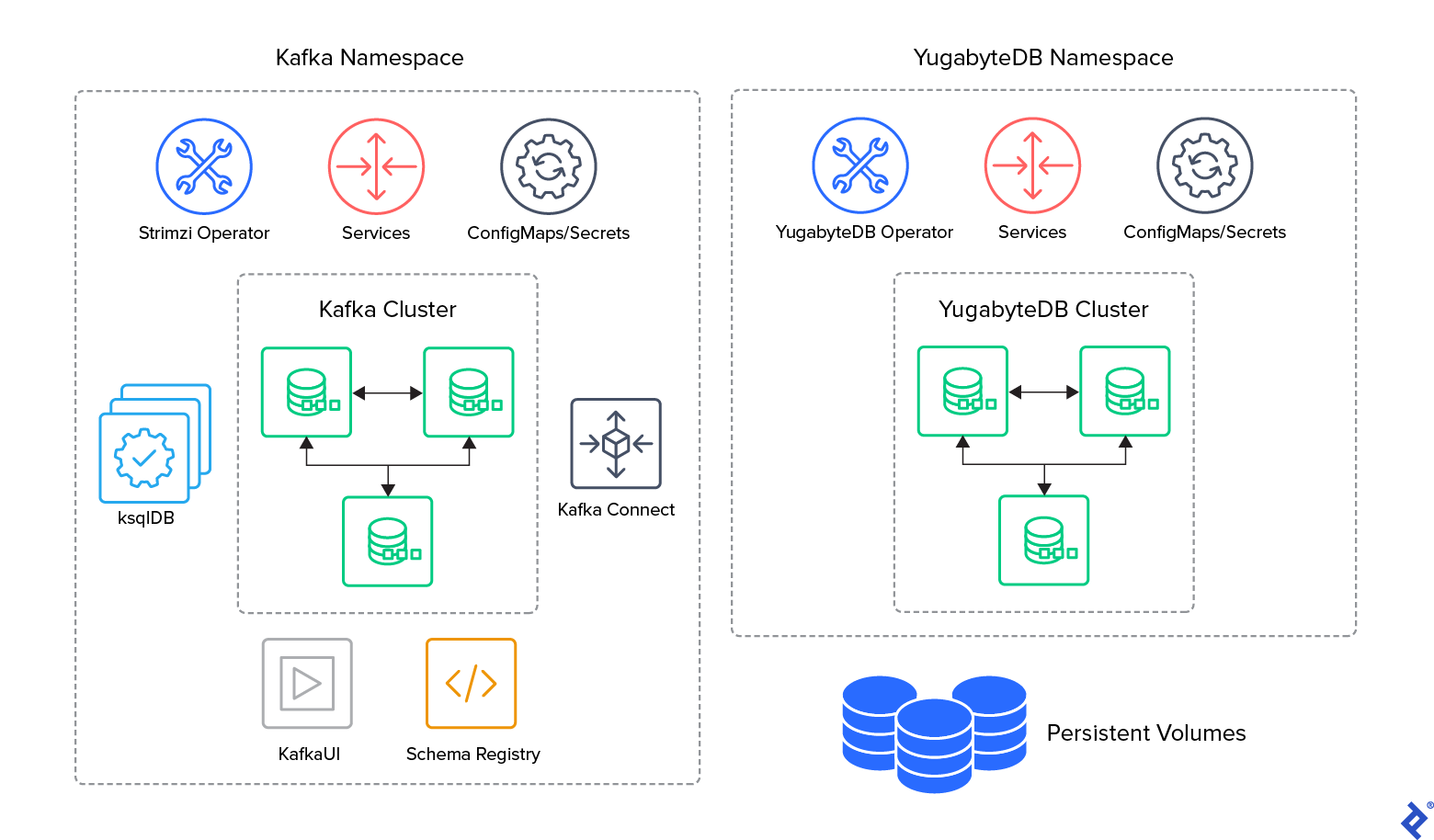

We will deploy all of our stateful and stateless services to Kubernetes. For our stateful services (i.e., YugabyteDB and Kafka), we will use an additional subsystem: Kubernetes operators.

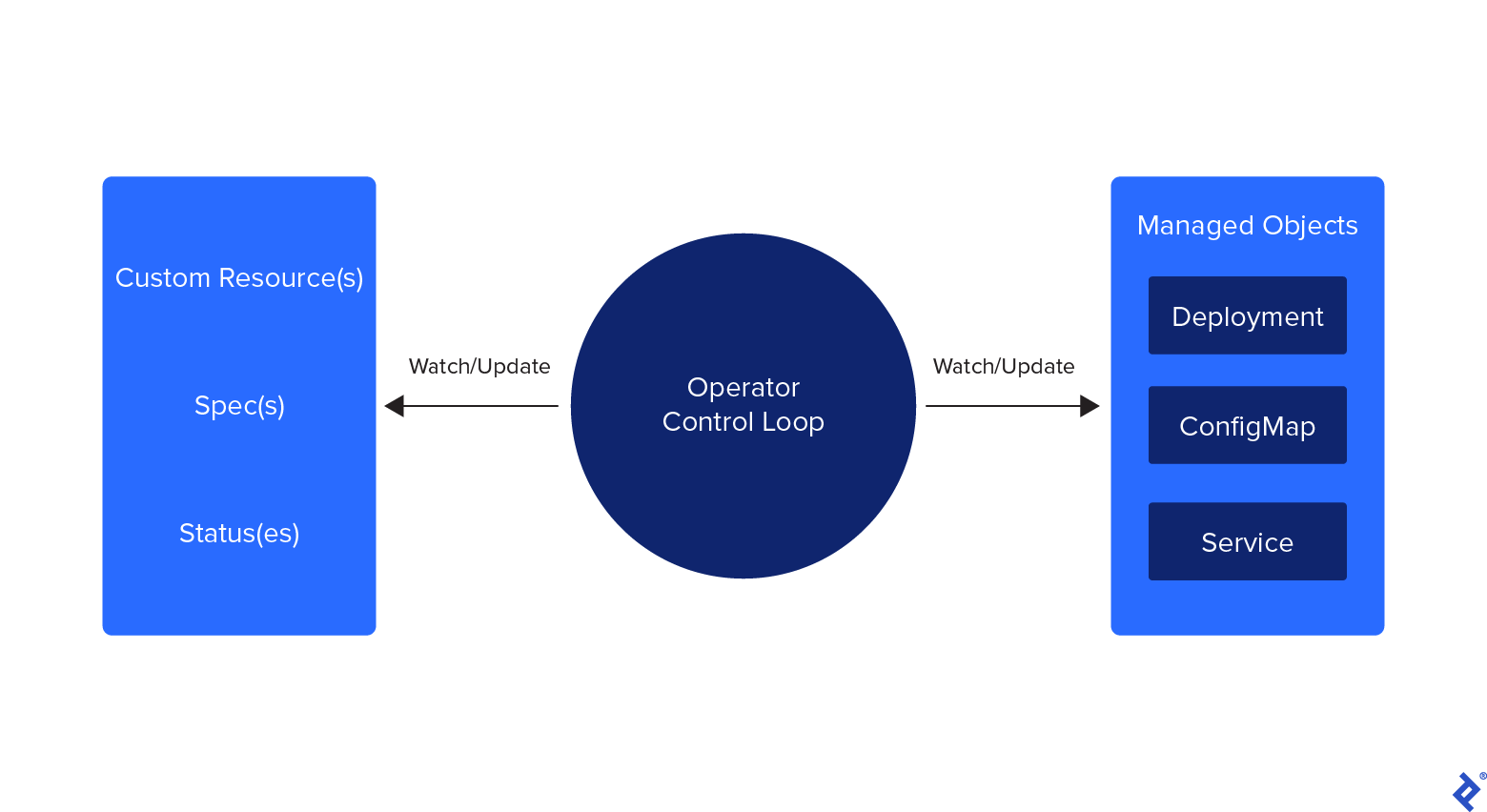

A Kubernetes operator is a program that runs in Kubernetes and manages other resources there. For example, if we want to install a Kafka cluster with all its components (e.g., Schema Registry, Kafka Connect), we would need to oversee hundreds of resources, such as stateful sets, services, PVCs, volumes, config maps, and secrets. Kubernetes operators help by removing the overhead of managing these resources.
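Under the hood, an operator follows the Kubernetes controller pattern. It repeatedly compares the desired state declared in a custom resource with the state it observes in the cluster, then takes actions to close the gap. The toy reconciler below illustrates only that idea. Its resource model and action names are hypothetical and far simpler than what Strimzi or the YugabyteDB operator actually manage.

```python
from dataclasses import dataclass
from typing import List

@dataclass
class DesiredState:
    """Declared state, as a custom resource might express it (hypothetical fields)."""
    replicas: int
    version: str

@dataclass
class ObservedPod:
    """What the operator currently sees running in the cluster."""
    name: str
    version: str

def reconcile(desired: DesiredState, observed: List[ObservedPod], name: str) -> List[str]:
    """Return the actions that move the observed pods toward the desired state."""
    actions: List[str] = []
    existing = {pod.name: pod for pod in observed}
    wanted = [f"{name}-{i}" for i in range(desired.replicas)]  # stable, ordered names

    # Create members that should exist but do not.
    for pod_name in wanted:
        if pod_name not in existing:
            actions.append(f"create {pod_name}")

    # Remove members beyond the desired replica count.
    for pod_name in sorted(existing):
        if pod_name not in wanted:
            actions.append(f"delete {pod_name}")

    # Upgrade surviving members that run an outdated version.
    for pod_name in wanted:
        pod = existing.get(pod_name)
        if pod is not None and pod.version != desired.version:
            actions.append(f"upgrade {pod_name} to {desired.version}")

    return actions

desired = DesiredState(replicas=3, version="3.6")
observed = [ObservedPod("kafka-0", "3.6"), ObservedPod("kafka-1", "3.5"), ObservedPod("kafka-3", "3.6")]
print(reconcile(desired, observed, "kafka"))
# ['create kafka-2', 'delete kafka-3', 'upgrade kafka-1 to 3.6']
```

A real operator reruns this comparison whenever watched resources change, applies the actions through the Kubernetes API, and stops acting once the observed state matches, which is when `reconcile` returns an empty list.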

Stateful system vendors and enterprise developers are the primary authors of these operators. Regular developers and IT teams can use these operators to manage their infrastructure more easily. Operators allow for a simple, declarative state definition that is then used to provision, configure, update, and manage the associated systems.
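Strimzi's `Kafka` custom resource is one example of such a declarative definition. It describes an entire cluster in a few fields, and the operator creates the underlying Kubernetes resources from it. The sketch below builds that resource as a Python dict and prints it as JSON, which `kubectl apply -f` accepts just as it accepts YAML. It follows the `kafka.strimzi.io/v1beta2` layout for ZooKeeper-based clusters. Recent Strimzi releases run Kafka in KRaft mode and define brokers through `KafkaNodePool` resources instead, so check the schema for your Strimzi version. The cluster name, sizes, and listener are placeholders.

```python
import json

def strimzi_kafka(name: str, brokers: int = 3, storage_gb: int = 100) -> dict:
    """Build a Strimzi Kafka custom resource (ZooKeeper-based v1beta2 layout)."""
    replication = min(3, brokers)
    return {
        "apiVersion": "kafka.strimzi.io/v1beta2",
        "kind": "Kafka",
        "metadata": {"name": name},
        "spec": {
            "kafka": {
                "replicas": brokers,
                "listeners": [
                    {"name": "plain", "port": 9092, "type": "internal", "tls": False}
                ],
                "storage": {"type": "persistent-claim", "size": f"{storage_gb}Gi"},
                "config": {
                    # Replicate data so that one broker can fail without data loss.
                    "default.replication.factor": replication,
                    "min.insync.replicas": max(1, replication - 1),
                },
            },
            "zookeeper": {
                "replicas": 3,
                "storage": {"type": "persistent-claim", "size": "10Gi"},
            },
            # Lets topics and users be managed declaratively, too.
            "entityOperator": {"topicOperator": {}, "userOperator": {}},
        },
    }

print(json.dumps(strimzi_kafka("heartbeat-cluster"), indent=2))
# With the Strimzi operator installed: kubectl apply -f kafka.json -n kafka
```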

In the early days of Kubernetes, developers managed their clusters with raw manifest definitions. Then Helm entered the picture and simplified Kubernetes operations, but there was still room for further optimization. Kubernetes operators came into being and, in concert with Helm, made Kubernetes a technology that developers could quickly put into practice.

To show how pervasive these operators are, note that every system presented in this article already has a released operator:

Having discussed all the necessary components, we can now examine an overview of our system.

Our Architecture With Preferred Systems

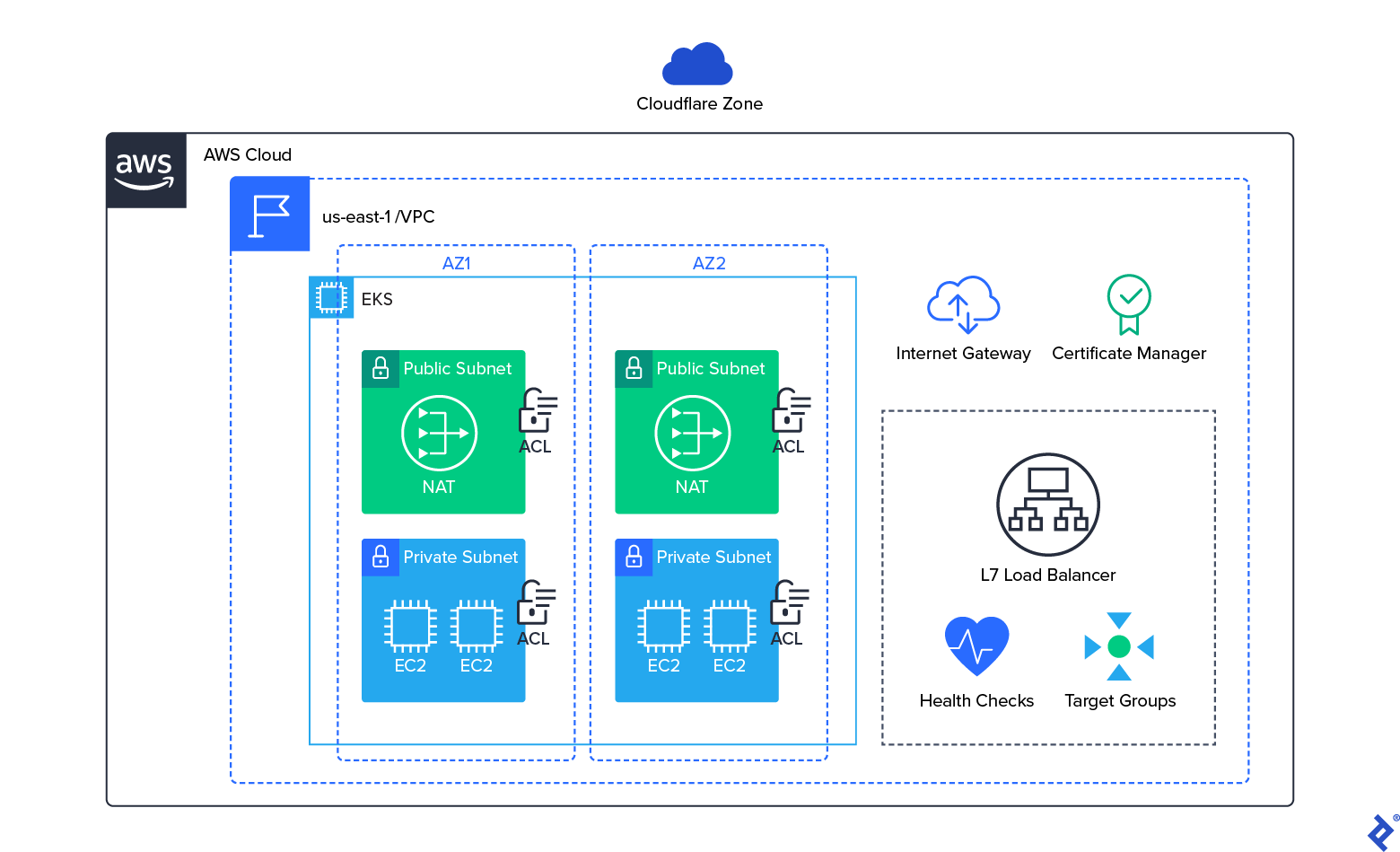

Although our design contains many components, the overall architecture diagram of our system is fairly simple:

Focusing on our Kubernetes environment, we can simply install our Kubernetes operators, Strimzi and YugabyteDB, and they will do the rest of the work of installing the remaining services. Our overall ecosystem within the Kubernetes environment is as follows:

This deployment describes a distributed cloud architecture made simple by today's technologies. What was out of reach for most teams as recently as five years ago may now take only a few hours to implement.

The editorial team of the Toptal Engineering Blog extends its gratitude to David Prifti and Deepak Agrawal for reviewing the technical content and code samples presented in this article.