In this post, we introduce Koala, a chatbot trained by fine-tuning Meta's LLaMA on dialogue data gathered from the web. We describe the dataset curation and training process of our model, and also present the results of a user study that compares our model to ChatGPT and Stanford's Alpaca. Our results show that Koala can effectively respond to a variety of user queries, generating responses that are often preferred over Alpaca's, and at least tied with ChatGPT's in over half of the cases.

We hope that these results contribute further to the discourse around the relative performance of large closed-source models and smaller public models. In particular, they suggest that models small enough to be run locally can capture much of the performance of their larger cousins if trained on carefully sourced data. This might imply, for example, that the community should put more effort into curating high-quality datasets, as this might do more to enable safer, more factual, and more capable models than simply increasing the size of existing systems. We emphasize that Koala is a research prototype, and while we hope that its release will provide a valuable community resource, it still has major shortcomings in terms of content, safety, and reliability, and should not be used outside of research.

System Overview

Large language models (LLMs) have enabled increasingly powerful virtual assistants and chatbots, with systems such as ChatGPT, Bard, Bing Chat, and Claude able to respond to a breadth of user queries, provide sample code, and even write poetry. Many of the most capable LLMs require huge computational resources to train, and oftentimes use large and proprietary datasets. This suggests that in the future, highly capable LLMs will be largely controlled by a small number of organizations, and both users and researchers will pay to interact with these models without direct access to modify and improve them on their own. On the other hand, recent months have also seen the release of increasingly capable freely available or (partially) open-source models, such as LLaMA. These systems typically fall short of the most capable closed models, but their capabilities have been rapidly improving. This presents the community with an important question: will the future see more and more consolidation around a handful of closed-source models, or the growth of open models with smaller architectures that approach the performance of their larger but closed-source cousins?

While the open models are unlikely to match the scale of closed-source models, perhaps the use of carefully selected training data can enable them to approach their performance. In fact, efforts such as Stanford's Alpaca, which fine-tunes LLaMA on data from OpenAI's GPT model, suggest that the right data can improve smaller open-source models significantly.

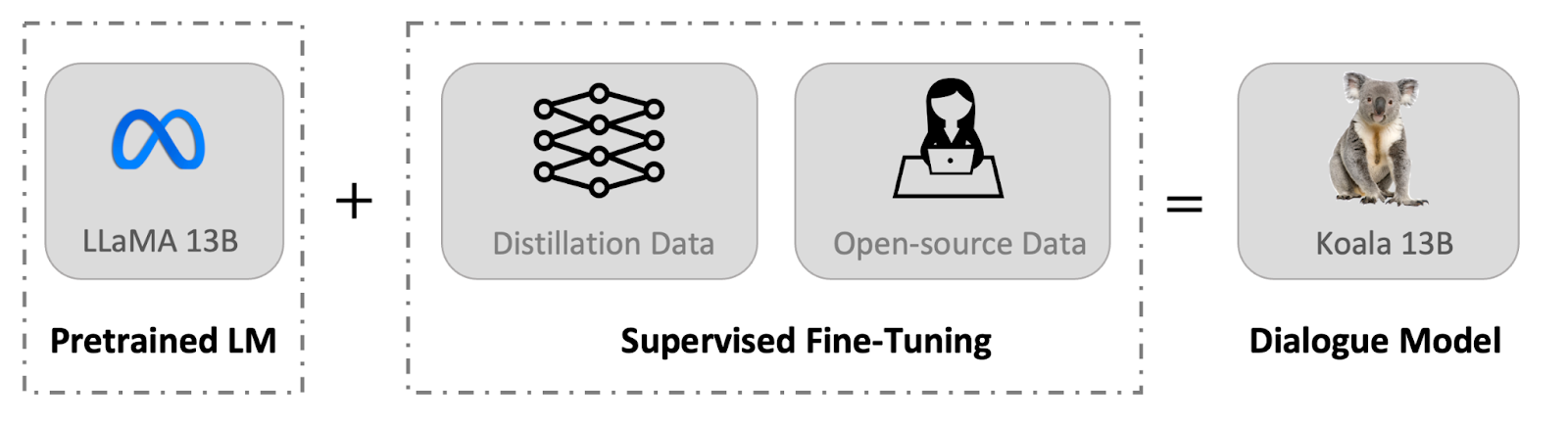

We introduce a new model, Koala, which provides an additional piece of evidence toward this discussion. Koala is fine-tuned on freely available interaction data scraped from the web, but with a specific focus on data that includes interaction with highly capable closed-source models such as ChatGPT. We fine-tune a LLaMA base model on dialogue data scraped from the web and public datasets, which includes high-quality responses to user queries from other large language models, as well as question answering datasets and human feedback datasets. The resulting model, Koala-13B, shows competitive performance to existing models, as suggested by our human evaluation on real-world user prompts.

Our results suggest that learning from high-quality datasets can mitigate some of the shortcomings of smaller models, maybe even matching the capabilities of large closed-source models in the future. This might imply, for example, that the community should put more effort into curating high-quality datasets, as this might do more to enable safer, more factual, and more capable models than simply increasing the size of existing systems.

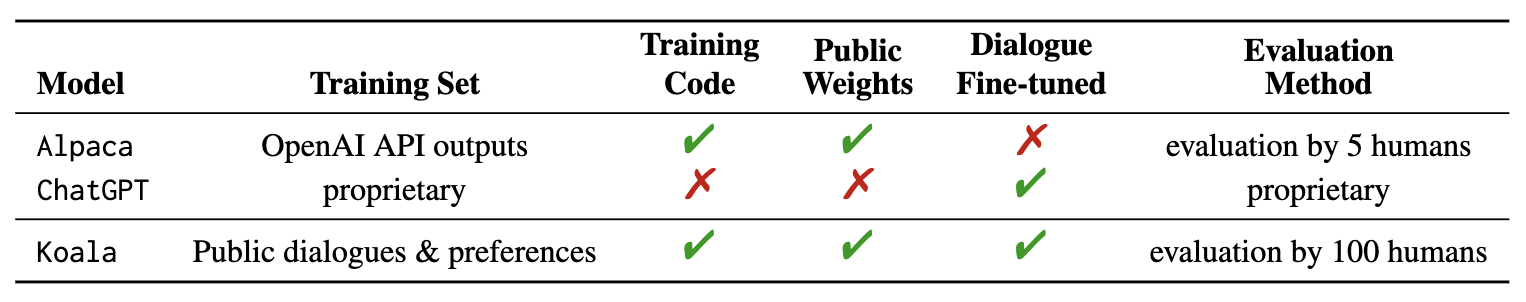

By encouraging researchers to engage with our system demo, we hope to uncover any unexpected features or deficiencies that will help us evaluate the models in the future. We ask researchers to report any alarming actions they observe in our web demo to help us comprehend and address any issues. As with any release, there are risks, and we will detail our reasoning for this public release later in this blog post. We emphasize that Koala is a research prototype, and while we hope that its release will provide a valuable community resource, it still has major shortcomings in terms of content, safety, and reliability, and should not be used outside of research. Below we provide an overview of the differences between Koala and notable existing models.

A primary obstacle in building dialogue models is curating training data. Prominent chat models, including ChatGPT, Bard, Bing Chat, and Claude, use proprietary datasets built using significant amounts of human annotation. To construct Koala, we curated our training set by gathering dialogue data from the web and public datasets. Part of this data includes dialogues with large language models (e.g., ChatGPT) which users have posted online.

Rather than maximizing quantity by scraping as much web data as possible, we focus on collecting a small high-quality dataset. We use public datasets for question answering, human feedback (responses rated both positively and negatively), and dialogues with existing language models. We provide the specific details of the dataset composition below.

ChatGPT Distillation Data

Public User-Shared Dialogues with ChatGPT (ShareGPT). Around 60K dialogues shared by users on ShareGPT were collected using public APIs. To maintain data quality, we deduplicated at the user-query level and removed any non-English conversations. This leaves approximately 30K examples.
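The filtering step described above could look roughly like the following. This is a minimal sketch and not the pipeline used for Koala: the record layout (`turns`, `role`, `text`), the choice of the first user turn as the deduplication key, and the ASCII-ratio language check are all assumptions made for illustration. A real pipeline would more likely use a proper language-identification model.

```python
from typing import Dict, Iterable, List


def normalize_query(text: str) -> str:
    """Lowercase and collapse whitespace so trivially different queries match."""
    return " ".join(text.lower().split())


def looks_english(text: str, min_ascii_ratio: float = 0.9) -> bool:
    """Crude stand-in for a language detector (assumption): share of ASCII characters."""
    if not text:
        return False
    ascii_chars = sum(1 for ch in text if ord(ch) < 128)
    return ascii_chars / len(text) >= min_ascii_ratio


def filter_dialogues(dialogues: Iterable[Dict]) -> List[Dict]:
    """Keep the first dialogue for each distinct first user query, English only."""
    seen = set()
    kept = []
    for dialogue in dialogues:
        turns = dialogue["turns"]  # hypothetical schema: list of {"role", "text"}
        user_turns = [t["text"] for t in turns if t["role"] == "user"]
        if not user_turns:
            continue
        full_text = " ".join(t["text"] for t in turns)
        if not looks_english(full_text):
            continue
        key = normalize_query(user_turns[0])
        if key in seen:
            continue  # duplicate user query, drop it
        seen.add(key)
        kept.append(dialogue)
    return kept
```

Keying on the normalized first user query is only one possible reading of "user-query level"; deduplicating on every user turn would be stricter.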

Human ChatGPT Comparison Corpus (HC3). We use both the human and ChatGPT responses from the HC3 English dataset, which contains around 60K human answers and 27K ChatGPT answers for around 24K questions, resulting in a total of around 87K question-answer examples.

Open Source Data

Open Instruction Generalist (OIG). We use a manually selected subset of components from the Open Instruction Generalist dataset curated by LAION. Specifically, we use the grade-school-math-instructions, the poetry-to-songs, and the plot-screenplay-books-dialogue datasets. This results in a total of around 30K examples.

Stanford Alpaca. We include the dataset used to train the Stanford Alpaca model. The dataset contains around 52K examples, which were generated by OpenAI's text-davinci-003 following the self-instruct process. It is worth noting that the HC3, OIG, and Alpaca datasets are single-turn question answering, while the ShareGPT dataset consists of dialogue conversations.

Anthropic HH. The Anthropic HH dataset contains human ratings of harmfulness and helpfulness of model outputs. The dataset contains ~160K human-rated examples, where each example consists of a pair of responses from a chatbot, one of which is preferred by humans. This dataset provides both capabilities and additional safety protections for our model.

OpenAI WebGPT. The OpenAI WebGPT dataset includes a total of around 20K comparisons, where each example comprises a question, a pair of model answers, and metadata. The answers are rated by humans with a preference score.

OpenAI Summarization. The OpenAI summarization dataset contains ~93K examples, each consisting of feedback from humans regarding the summarizations generated by a model. Human evaluators chose the superior summary from two options.

When using the open-source datasets, some of the datasets have two responses, corresponding to responses rated as good or bad (Anthropic HH, WebGPT, OpenAI Summarization). We build on prior research by Keskar et al., Liu et al., and Korbak et al., who demonstrate the effectiveness of conditioning language models on human preference markers (such as "a helpful answer" and "an unhelpful answer") for improved performance. We condition the model on either a positive or negative marker depending on the preference label. We use positive markers for the datasets without human feedback. For evaluation, we prompt models with positive markers.
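To make the marker conditioning concrete, here is a small sketch of how training strings might be assembled. The two marker phrases come from the text above; the prompt template (`USER:`, `Give ... .`, `ASSISTANT:`) and all function names are hypothetical and are not taken from the Koala or EasyLM code.

```python
from typing import List, Optional

POSITIVE_MARKER = "a helpful answer"
NEGATIVE_MARKER = "an unhelpful answer"


def build_prompt(query: str, positive: bool = True) -> str:
    """Prompt prefix carrying the preference marker (template is an assumption)."""
    marker = POSITIVE_MARKER if positive else NEGATIVE_MARKER
    return f"USER: {query}\nGive {marker}.\nASSISTANT:"


def format_example(query: str, response: str, preferred: Optional[bool] = None) -> str:
    """Training string for one response.

    preferred=None means the dataset has no human feedback, so the positive
    marker is used, as the text describes.
    """
    positive = preferred is not False
    return f"{build_prompt(query, positive)} {response}"


def expand_comparison(query: str, chosen: str, rejected: str) -> List[str]:
    """Turn one human comparison into a positive and a negative example."""
    return [
        format_example(query, chosen, preferred=True),
        format_example(query, rejected, preferred=False),
    ]
```

At evaluation time, only `build_prompt(query)` with the default positive marker would be used, matching the last sentence of the paragraph above.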

The Koala model is implemented with JAX/Flax in EasyLM, our open-source framework that makes it easy to pre-train, fine-tune, serve, and evaluate various large language models. We train our Koala model on a single Nvidia DGX server with 8 A100 GPUs. It takes 6 hours to complete the training for 2 epochs. On public cloud computing platforms, such a training run typically costs less than $100 with preemptible instances.

Preliminary Evaluation

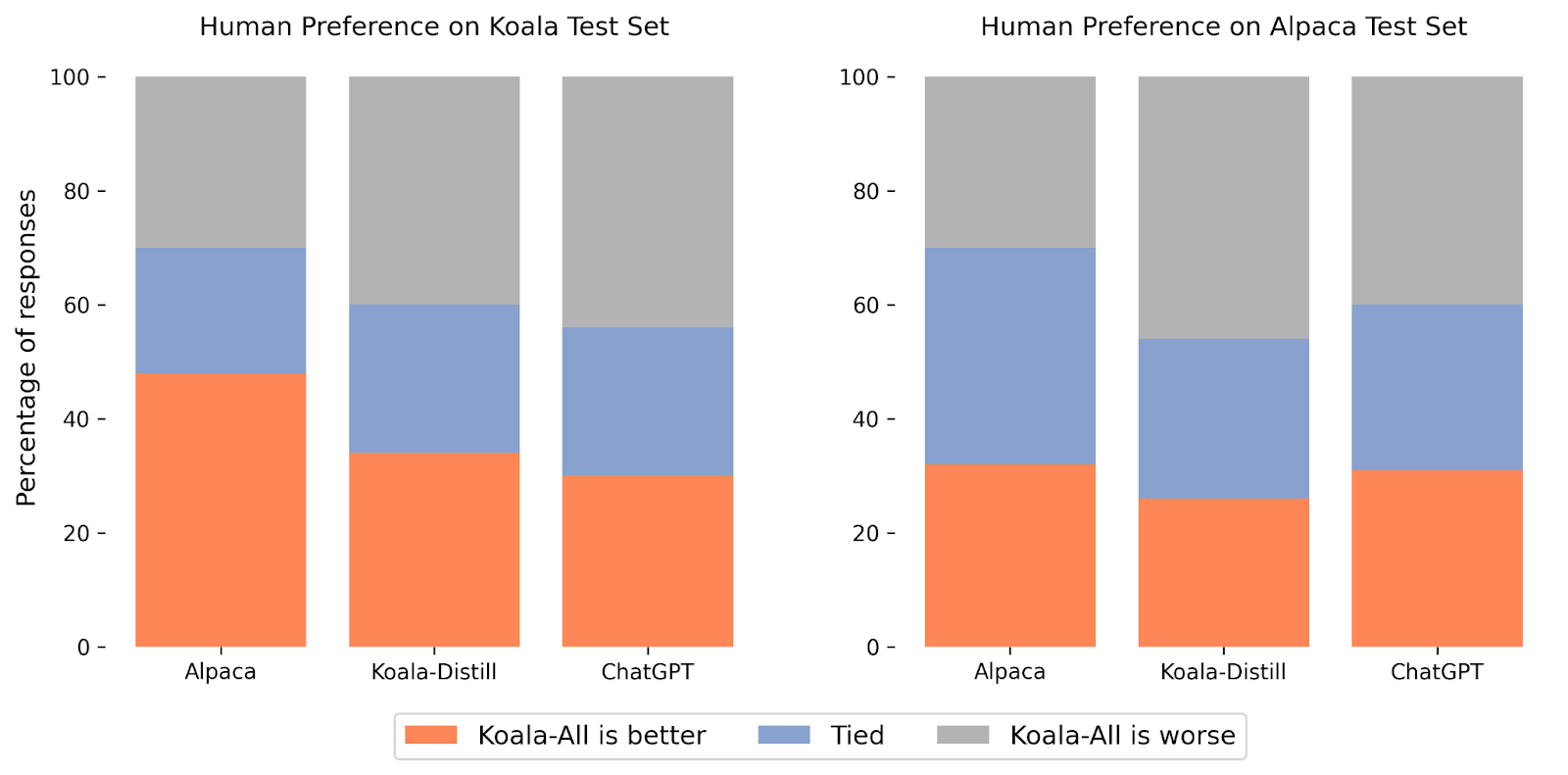

In our experiments, we evaluated two models: Koala-Distill, which solely employs distillation data, and Koala-All, which employs all of the data, including both distillation and open-source data. Our aim is to compare the performance of these models and evaluate the influence of distillation and open-source datasets on final performance. We ran a human evaluation to compare Koala-All with Koala-Distill, Alpaca, and ChatGPT. We present our results in the figure above. We evaluate on two different sets, one consisting of 180 test queries used by Stanford's Alpaca ("Alpaca Test Set"), and our own test set ("Koala Test Set").

The Alpaca test set consists of user prompts sampled from the self-instruct dataset, and represents in-distribution data for the Alpaca model. To provide a second, more realistic evaluation protocol, we also introduce our own (Koala) test set, which consists of 180 real user queries that were posted online. These user queries span various topics, are generally conversational in style, and are likely more representative of the real-world use cases of chat-based systems. To mitigate possible test-set leakage, we filtered out queries that have a BLEU score greater than 20% with any example from our training set. Additionally, we removed non-English and coding-related prompts, since responses to these queries cannot be reliably reviewed by our pool of raters (crowd workers). We release our test set for academic use and future benchmarking.
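The leakage filter can be sketched as follows. The post does not say which BLEU variant, tokenizer, or direction of comparison was used, so this example assumes a plain unsmoothed sentence-level BLEU over whitespace tokens with up to 4-grams, treating the test query as the candidate and each training example as the reference. The function names are illustrative only.

```python
import math
from collections import Counter
from typing import List, Sequence


def ngrams(tokens: Sequence[str], n: int) -> Counter:
    """Count all n-grams of length n in a token sequence."""
    return Counter(tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1))


def sentence_bleu(candidate: str, reference: str, max_n: int = 4) -> float:
    """Unsmoothed BLEU in [0, 1] of one candidate against one reference."""
    cand = candidate.lower().split()
    ref = reference.lower().split()
    if not cand or not ref:
        return 0.0
    log_precisions = []
    for n in range(1, max_n + 1):
        cand_counts = ngrams(cand, n)
        ref_counts = ngrams(ref, n)
        # Clipped overlap: each candidate n-gram counts at most as often as in the reference.
        overlap = sum(min(c, ref_counts[g]) for g, c in cand_counts.items())
        total = sum(cand_counts.values())
        if overlap == 0 or total == 0:
            return 0.0  # without smoothing, any zero precision gives BLEU 0
        log_precisions.append(math.log(overlap / total))
    # Brevity penalty for candidates shorter than the reference.
    brevity = 1.0 if len(cand) > len(ref) else math.exp(1 - len(ref) / len(cand))
    return brevity * math.exp(sum(log_precisions) / max_n)


def filter_leaked(queries: List[str], training_examples: List[str],
                  threshold: float = 0.20) -> List[str]:
    """Drop any query whose BLEU with some training example exceeds the threshold."""
    return [
        q for q in queries
        if all(sentence_bleu(q, t) <= threshold for t in training_examples)
    ]
```

Because the unsmoothed score is zero whenever a query shares no 4-gram with a training example, this variant only flags fairly close matches; a smoothed BLEU would be stricter.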

With these two evaluation sets, we conducted a blind pairwise comparison by asking approximately 100 evaluators on the Amazon Mechanical Turk platform to compare the quality of model outputs on these held-out sets of prompts. In the ratings interface, we present each rater with an input prompt and the output of two models. They are then asked to judge which output is better (or that they are equally good) using criteria related to response quality and correctness.
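As an illustration of how such pairwise judgments turn into the "better" and "better or tied" percentages reported in this kind of study, here is a minimal tallying sketch. The label vocabulary (`"win"`, `"tie"`, `"loss"`, from the perspective of the first model) is an assumption, and the example labels in the usage note are toy values, not data from our evaluation.

```python
from collections import Counter
from typing import Dict, Iterable


def summarize_pairwise(judgments: Iterable[str]) -> Dict[str, float]:
    """Fractions of wins, ties, and losses for the first model in each pair."""
    counts = Counter(judgments)
    unknown = set(counts) - {"win", "tie", "loss"}
    if unknown:
        raise ValueError(f"unexpected labels: {sorted(unknown)}")
    total = sum(counts.values())
    if total == 0:
        raise ValueError("no judgments to summarize")
    summary = {label: counts[label] / total for label in ("win", "tie", "loss")}
    # "Exceeded or tied" is the sum of the win and tie fractions.
    summary["win_or_tie"] = summary["win"] + summary["tie"]
    return summary
```

For example, `summarize_pairwise(["win", "win", "tie", "loss"])` gives a win fraction of 0.5 and a win-or-tie fraction of 0.75.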

On the Alpaca test set, Koala-All exhibited comparable performance to Alpaca. However, on our proposed test set, which consists of real user queries, Koala-All was rated as better than Alpaca in nearly half the cases, and either exceeded or tied Alpaca in 70% of the cases. Of course, the more conversational prompts in the Koala test set more closely resemble the Koala training set, so this is perhaps not surprising, but insofar as such prompts more closely resemble likely downstream use cases for such models, this suggests that Koala would be expected to perform better in assistant-like applications. It also suggests that using data of LLM interactions sourced from examples posted by users on the web is an effective strategy for endowing such models with effective instruction execution capabilities.

Perhaps more surprisingly, we found that training on open-source data in addition to the distillation data (Koala-All) performs slightly worse than training on just ChatGPT distillation data (Koala-Distill), as shown by the comparison to Koala-Distill on both datasets. Though the difference might not be significant, this result suggests that the ChatGPT dialogues are of such high quality that incorporating even twice as much open-source data did not lead to a significant improvement. Our initial hypothesis was that Koala-All should perform at least somewhat better, hence we used it as our primary model in all evaluations, but a potential takeaway from these experiments is that effective instruction and assistant models could be fine-tuned from LLM backbones such as LLaMA entirely using data from larger and more powerful models, so long as the prompts for these responses are representative of the kinds of prompts that users will provide at test time. This also further supports the notion that the key to building strong dialogue models may lie more in curating high-quality dialogue data that is diverse in user queries, rather than simply reformatting existing datasets as questions and answers.

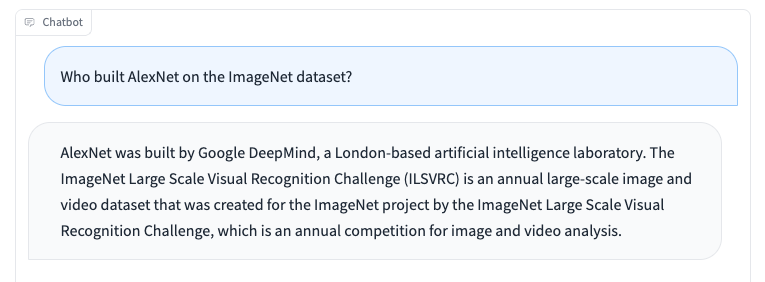

Like other language models, Koala has limitations and can be harmful when misused. We observe that Koala can hallucinate and generate non-factual responses with a highly confident tone, which is likely a result of the dialogue fine-tuning. Perhaps an unfortunate implication of this is that smaller models inherit the confident style of larger language models before they inherit the same level of factuality. If true, this is a limitation that is important to study in future work. When misused, the hallucinated responses from Koala can potentially facilitate the spread of misinformation, spam, and other content.

Koalas can hallucinate inaccurate information in a confident and convincing tone. Beyond hallucinations, Koala shares deficiencies with other chatbot language models, some of which include:

- Biases and Stereotypes: Our model will inherit biases from the dialogue data it was trained on, possibly perpetuating harmful stereotypes, discrimination, and other harms.

- Lack of Common Sense: While large language models can generate text that appears to be coherent and grammatically correct, they often lack common sense knowledge that humans take for granted. This can lead to nonsensical or inappropriate responses.

- Limited Understanding: Large language models can struggle to understand the context and nuances of a dialogue. They can also have difficulty identifying sarcasm or irony, which can lead to misunderstandings.

To address the safety implications of Koala, we included adversarial prompts in the dataset from ShareGPT and Anthropic HH to make the model more robust and harmless. To further mitigate potential misuse, we deploy OpenAI's content moderation filter in our online demo to flag and remove unsafe content. We will be cautious about the safety of Koala, and we are committed to performing further safety evaluations of it while also monitoring our interactive demo. Overall, we decided to release Koala because we think its benefits outweigh its risks.

We are releasing the following artifacts:

The online demo is a research preview intended for academic research only, subject to the model License of LLaMA, Terms of Use of the data generated by OpenAI, and Privacy Practices of ShareGPT. Any other usage of the online demo, including but not limited to commercial usage, is strictly prohibited. Please contact us if you find any potential violations. Our training and inference code is released under the Apache License 2.0.

We hope that the Koala model will serve as a useful platform for future academic research on large language models: the model is capable enough to exhibit many of the capabilities that we associate with modern LLMs, while being small enough to be fine-tuned or utilized with more limited compute. Potentially promising directions might include:

- Safety and alignment: Koala allows further study of language model safety and better alignment with human intentions.

- Model bias: Koala enables us to better understand the biases of large language models, the presence of spurious correlations and quality issues in dialogue datasets, and methods to mitigate such biases.

- Understanding large language models: because Koala inference can be performed on relatively inexpensive commodity GPUs, it enables us to better inspect and understand the internals of dialogue language models, making (previously black-box) language models more interpretable.

The Koala model is a joint effort across multiple research groups in the Berkeley Artificial Intelligence Research Lab (BAIR) of UC Berkeley.

Students (alphabetical order):

Xinyang Geng, Arnav Gudibande, Hao Liu, Eric Wallace

Advisors (alphabetical order):

Pieter Abbeel, Sergey Levine, Dawn Song

We express our gratitude to Sky Computing Lab at UC Berkeley for providing us with serving backend support. We would like to thank Charlie Snell, Lianmin Zheng, Zhuohan Li, Hao Zhang, Wei-Lin Chiang, Zhanghao Wu, Aviral Kumar, and Marwa Abdulhai for discussion and feedback. We would like to thank Tatsunori Hashimoto and Jacob Steinhardt for discussion around limitations and safety. We would also like to thank Yuqing Du and Ritwik Gupta for helping with the BAIR blog. Please check out the blog post from Sky Computing Lab about a concurrent effort on their chatbot, Vicuna.